MySQL SQOOP Import Fails on HDP 2.3

Unknown

It looks like the SQOOP-1400 error has not been resolved in the HDP 2.3 distribution, which ships with Sqoop 1.4.6.

I tried to run a Sqoop MySQL import and got the exact same error.

Looking under the covers on RHEL 6.7 and CentOS 6.7, I see that the same problem persists.

The Sqoop libraries (including the MySQL connector jar) are in /usr/hdp/current/sqoop-server/lib.

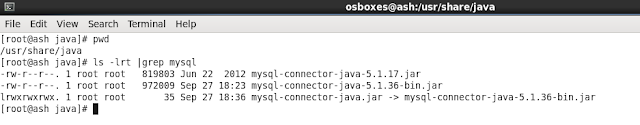

The jar there is a symbolic link that points into /usr/share/java.
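To confirm which connector version that link chain actually resolves to, a minimal Python sketch like the one below follows every symlink and pulls the version out of the final file name. It assumes the jar keeps the usual `mysql-connector-java-<version>...jar` naming; `connector_version` is a hypothetical helper, not part of Sqoop or HDP.

```python
import os
import re
from typing import Optional

# Assumed naming: mysql-connector-java-<version>[-bin].jar
_VERSION_RE = re.compile(r"mysql-connector-java-(\d+(?:\.\d+)+)")


def connector_version(jar_path: str) -> Optional[str]:
    """Follow every symlink in jar_path and return the connector version, or None."""
    # realpath resolves the whole chain: Sqoop lib link -> /usr/share/java link -> real jar.
    real = os.path.realpath(jar_path)
    match = _VERSION_RE.search(os.path.basename(real))
    return match.group(1) if match else None
```

For example, assuming the jar in the Sqoop lib directory is named `mysql-connector-java.jar`, calling `connector_version("/usr/hdp/current/sqoop-server/lib/mysql-connector-java.jar")` before and after the fix shows whether the link now lands on 5.1.36.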

So now to the important question: how to fix it.

Step 1 - Download the MySQL connector. The current platform-independent version is 5.1.36.

https://dev.mysql.com/downloads/connector/j/

Step 2 - Untar the file and copy the jar to /usr/share/java.

Then re-point the soft link that points to the older version:

[root@ash java]# ln -nsf mysql-connector-java-5.1.36-bin.jar mysql-connector-java.jar
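Step 2 can also be scripted. The following is a minimal Python sketch, not the procedure used above: it assumes the downloaded tarball contains a single `mysql-connector-java-<version>-bin.jar`, and the helper names `extract_connector_jar` and `repoint_symlink` are hypothetical. Instead of removing and recreating the link, it creates the new link under a temporary name and renames it over the old one, so the link is never missing in between.

```python
import os
import shutil
import tarfile


def extract_connector_jar(tarball: str, dest_dir: str) -> str:
    """Copy the mysql-connector-java-*-bin.jar out of tarball into dest_dir; return its name."""
    with tarfile.open(tarball) as tar:  # default mode auto-detects gzip compression
        for member in tar.getmembers():
            name = os.path.basename(member.name)
            if (member.isfile() and name.startswith("mysql-connector-java-")
                    and name.endswith("-bin.jar")):
                # Write only the file itself (no archive directories) into dest_dir.
                with tar.extractfile(member) as src, \
                        open(os.path.join(dest_dir, name), "wb") as out:
                    shutil.copyfileobj(src, out)
                return name
    raise FileNotFoundError(f"no mysql-connector-java-*-bin.jar found in {tarball}")


def repoint_symlink(target: str, link_path: str) -> None:
    """Make link_path point at target, replacing any existing link (like `ln -nsf`)."""
    link_dir = os.path.dirname(os.path.abspath(link_path))
    tmp_link = os.path.join(link_dir, "." + os.path.basename(link_path) + ".tmp")
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)  # leftover from an interrupted run
    os.symlink(target, tmp_link)
    # rename() swaps the directory entry in one step on POSIX filesystems.
    os.replace(tmp_link, link_path)
```

Run as root, something like `repoint_symlink(extract_connector_jar("mysql-connector-java-5.1.36.tar.gz", "/usr/share/java"), "/usr/share/java/mysql-connector-java.jar")` mirrors the `ln -nsf` command above; the relative target resolves inside /usr/share/java just as it does with `ln`.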

Your MySQL Sqoop import should work fine now.

I would still like to know whether I missed something in the installation or configuration, since the JIRA is marked as resolved and fixed.

12:15 PM

HDP, SQOOP;SQOOP-1400

TOAD for Hortonworks 2.3

Unknown

TOAD is a tool that most folks who have done data analysis and database development are familiar with.

With the advent of big data technologies, some amount of analysis has shifted to the Hadoop space.

So I was really excited when Toad for Hadoop became available for download. For a data analyst, this means not having to worry about where he is connecting to (relational Oracle DB vs. Hive Hadoop metastore): just use the familiar TOAD interface to run SQL (or HQL, for the purists) against Hadoop.

The environment I have is a Hortonworks HDP 2.3 platform on CentOS 6.7. TOAD does not officially support HDP, but since Hortonworks is a pure Hadoop platform with no customizations, it should work nevertheless.

Brad Wulf (@bradwulf) has a detailed post about how to set up Toad against HDP:

http://www.toadworld.com/products/toad-for-hadoop/b/weblog/archive/2015/07/24/toad-for-hadoop-does-39-nt-officially-support-hortonworks-here-39-s-how-to-connect-to-it

However, I intend to go one level deeper, checking every config and port so you know what to look for if you get an error. That is pretty much what I had to go through to get this up and running.

Step 1: Verify your environment.

Step 2: Ensure the cluster is up and running by launching Ambari. You may do this from the same machine you are running Toad on. This confirms that your cluster is accessible from your machine and that no firewall issues exist. (Hint: check the ResourceManager and JobHistory Server UIs as well, on ports 8088 and 19888.)
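If Ambari loads in the browser but Toad still cannot connect, a quick TCP check from the Toad machine helps separate firewall problems from configuration problems. This is a minimal Python sketch using only the standard library; `port_open` and `check_cluster_uis` are hypothetical helper names, and 8080 as the Ambari web port is an assumption based on Ambari's usual default.

```python
import socket

# Web UI ports to probe. 8088 and 19888 come from the hint above;
# 8080 is assumed to be Ambari's default web port.
UI_PORTS = {"Ambari": 8080, "ResourceManager UI": 8088, "JobHistory UI": 19888}


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, host unreachable, or name lookup failed.
        return False


def check_cluster_uis(host: str) -> dict:
    """Map each UI name in UI_PORTS to whether its port is reachable from this machine."""
    return {name: port_open(host, port) for name, port in UI_PORTS.items()}
```

Call it with your cluster's host name, e.g. `print(check_cluster_uis("<your-ambari-host>"))`. An open port only shows that something is listening there; it does not prove that the Toad settings are right.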

Step 3: Verify the ResourceManager port. This is the only setting that differed when I configured the TOAD ports. The default configuration sometimes picks port 8032, vs. 8088, which is the default for Hortonworks. You can verify this in Ambari: go to YARN Service > Configurations > Advanced yarn-site > yarn.resourcemanager.webapp.address.
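To see both ResourceManager ports side by side without clicking through Ambari, you can read them straight from `yarn-site.xml` on a cluster node. A minimal Python sketch: it assumes the client config lives at `/etc/hadoop/conf/yarn-site.xml` (the usual HDP client location, but check yours), and `read_rm_ports` is a hypothetical helper. It reports `yarn.resourcemanager.webapp.address` (the web UI setting named above) next to `yarn.resourcemanager.address`, the RPC address whose stock Apache Hadoop default port is 8032.

```python
import xml.etree.ElementTree as ET

RM_PROPS = (
    "yarn.resourcemanager.address",         # RPC endpoint; Apache default port 8032
    "yarn.resourcemanager.webapp.address",  # web UI; default port 8088
)


def read_rm_ports(xml_text: "str | bytes") -> dict:
    """Return {property_name: port} for the ResourceManager addresses in yarn-site.xml content."""
    root = ET.fromstring(xml_text)
    ports = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name in RM_PROPS:
            # Values look like "host:port"; keep only the numeric port.
            port = value.rsplit(":", 1)[-1]
            if port.isdigit():
                ports[name] = int(port)
    return ports
```

Usage on a node: `with open("/etc/hadoop/conf/yarn-site.xml", "rb") as f: print(read_rm_ports(f.read()))`. Reading bytes lets the XML parser honor the file's own encoding declaration.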

Step 4: Launch the Toad application and go through the configuration steps. Once you get all the green checks, you are ready to roll.

You can set the execution engine to MapReduce or Tez using the commands below. The setting applies at the session level and overrides what is set in Ambari. Since the Tez engine runs faster in many cases, I use it by default and do see a difference in elapsed time. On the right-hand side you can see the properties of each query and the mode it was executed in, among other details.

set hive.execution.engine=mr;

set hive.execution.engine=tez;

I love the IntelliSense help that TOAD gives me.

Happy HQL’ing :)

Next I will play with data transfer and post my notes.

1:27 PM

HDP, Hive, Hortonworks, TOAD

My CBIP Experience

Unknown

I got the opportunity to attend the TDWI conference in Chicago in May 2015. The plan was finalized at the end of March (roughly 45 days prior), and that's when I decided to jump on the CBIP bandwagon.

Motivation

Status quo. Yes, this was my biggest motivation. Our daily jobs keep us pretty busy doing what we know best and trying to do it faster. Once you have spent a few years working with the same technology, you tend to get good at it, and that puts you in a false sense of security. Also, between meeting deadlines and making personal time, the thing you give up most is personal development.

For me it was a self-evaluation process to see where I stand, and whether I can commit myself to learn and pass an exam.

Why CBIP

Two words: technology agnostic.

There is an endless list of tool-based certifications in the data warehousing/business intelligence field, offered by industry leaders like Microsoft, IBM, and Informatica. Every certification has its merits. The CBIP certification does not focus on any specific tool, but on the fundamentals of BI and DW along with IS Core basics. In my opinion, if I were a hiring manager, this would tell me that the candidate has strong fundamentals and can quickly pick up any tool thrown at him/her.

Without much ado, I will go into what it took to get the certification and what it meant for me.

Preparation Time

Depends. Really :)

If your background is BI and DW with a few years under your belt, I would say 6 to 8 weeks at 7 to 8 hours per week. I would suggest spending a week going through the syllabus on the TDWI website, googling the topics, and seeing whether you feel comfortable with them. Each exam has 6 to 7 main topics; if you are familiar with them, it's safe to say that with some prep work you will be able to make it. If they seem very alien, you will want to spend more time on them. Well, that's true for any exam, isn't it :)

Preparation Material

CBIP Exam Guide - An absolute must. It sets the context and topics. It is given for free if you attend TDWI and the CBIP Exam Preparation session, generally on Day 1. However, if you plan to take the exam during a TDWI conference, order it in advance; it costs $125.

IS Core - The hardest of the 3 exams, simply because the subject is too broad to "study". I strongly recommend investing in the study material offered by DAMA for IS Core. It gives you a concise guide to the topics and sets boundaries. If you want to dive deep into any specific topic, Google is your friend.

I started off on my own without the study guide but soon realized that the topic is too vast to really "study" from an exam standpoint.

Data Warehousing Core - I found this exam to be pretty straightforward. I would think everyone who takes CBIP would feel the same way, since the intended audience definitely has a DW background. I skimmed through The Data Warehouse Toolkit by Kimball. There are lots of keywords in that book that you should be familiar with. The fact of the matter is that this is an exam: there are lots of DW concepts that you practice without using the "terminology". This book will familiarize you with all of it.

Business Intelligence Analytics - Specialty exam. I had hoped this would be an easy topic for me, but I was wrong. I really had to spend time preparing for it: lots of concepts on statistics, analytics, etc.

Books for Reference

Business Intelligence: The Savvy Manager's Guide by David Loshin (Morgan Kaufmann)

Quantitative Methods for Business by David R. Anderson

The preparation for the Analytics exam took me to Wikipedia countless times, reading and understanding a ton of statistical terms.

CBIP Exam Preparation class at TDWI - This would be a good finishing touch to your study. Typically held on Day 1 of any TDWI conference, it gives you a game-day prep.

Taking the Exam

You have the option to take the exams proctored offsite or as part of a TDWI conference. I chose the latter. Reason 1: the exams were $50 cheaper. Reason 2: if you don't clear an exam, you get an extra attempt for one exam, which is a feel-good cushion. Lastly, the CBIP class I mentioned before really does help you judge where you are.

Finally, some blogs that gave me good ideas. Thank you for your contributions:

My Final Thoughts

Personally, the exam preparation helped me understand what I do not know. It was humbling, to say the least. It gave me a better perspective of the BI landscape and helped me delve into and read about topics that I never knew existed.

I know a lot of people would ask whether this certification guarantees a job. I don't know. However, I am sure it would help you differentiate yourself and, at the least, take your resume to the top of the pile. Also, all the learning would help you articulate BI better and maybe make you appear a little smarter :)

PS - I will not discuss any specific questions, as that would be against the policy.

5:02 AM

Business Intelligence, CBIP, Data Warehousing, TDWI

Hello

Unknown

I have been thinking for a long time about setting up a blog where I can share what I have learned in my tech world and interact with like-minded folks in the community. So, better late than never, as I start my journey into the Hadoop world (technically a few months back... oh well!)

Coming from a warehousing/BI background, with SQL drilled into your brain, it is a bit unnerving to step into the open source world.

Through this blog I hope to document what I learn as I move forward and share my thoughts.
