MySQL SQOOP Import Fails on HDP 2.3

It looks like the SQOOP-1400 error has not been resolved in the HDP 2.3 distribution, which ships with Sqoop 1.4.6.

I tried to run a Sqoop MySQL import and got exactly the same error.

Looking under the covers on both RHEL 6.7 and CentOS 6.7, I see that the same problem persists.

The Sqoop library directory, which holds the MySQL connector jar, is /usr/hdp/current/sqoop-server/lib:

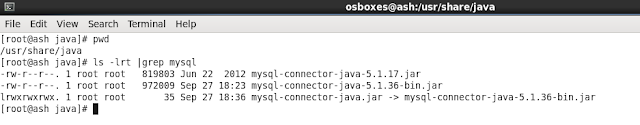

The connector jar there points to a symbolic link in /usr/share/java, which in turn points to an older version of the connector.
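To see which connector version Sqoop actually loads, you can walk the symlink chain one hop at a time. This is a minimal sketch: `show_link_chain` is a hypothetical helper (not an HDP tool), and it assumes the jar in the Sqoop lib directory is named `mysql-connector-java.jar`, so adjust the path to whatever your own listing shows.

```shell
#!/usr/bin/env bash
# Sketch: print every hop of a symlink chain, ending at the real file.
# show_link_chain is a hypothetical helper name.
show_link_chain() {
  local path="$1" target hops=0
  echo "$path"
  # Follow one hop at a time (max 40, to avoid looping on cyclic links)
  while [ -L "$path" ] && [ "$hops" -lt 40 ]; do
    target=$(readlink "$path")
    # A relative target is resolved against the link's own directory
    case "$target" in
      /*) path="$target" ;;
      *)  path="$(dirname "$path")/$target" ;;
    esac
    echo "  -> $path"
    hops=$((hops + 1))
  done
}
```

Usage, assuming the jar name above: `show_link_chain /usr/hdp/current/sqoop-server/lib/mysql-connector-java.jar`. The last line names the versioned jar that Sqoop ends up loading, which is the version you want to replace.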

So now to the important question: how to fix it.

Step 1 - Download the MySQL connector (Connector/J). The current platform-independent version is 5.1.36.

https://dev.mysql.com/downloads/connector/j/

Step 2 - Untar the file and copy the connector jar to /usr/share/java.

Then re-point the soft link that still points to the older version:

[root@ash java]# ln -nsf mysql-connector-java-5.1.36-bin.jar mysql-connector-java.jar
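Steps 1 and 2 can be scripted roughly as follows. This is a sketch, not an official installer: `install_connector` is a hypothetical helper, and it assumes you have already downloaded the tarball from the MySQL site and that the jar inside follows the `mysql-connector-java-<version>-bin.jar` naming used above. Run it as root, since /usr/share/java is root-owned.

```shell
#!/usr/bin/env bash
# Sketch: install a downloaded Connector/J tarball and re-point the generic
# mysql-connector-java.jar symlink at the new versioned jar.
# install_connector is a hypothetical helper name.
install_connector() {
  local tarball="$1" dest="${2:-/usr/share/java}" workdir jar rc
  workdir="$(mktemp -d)" || return 1
  # Extract into a scratch directory
  tar -xzf "$tarball" -C "$workdir" || { rm -rf "$workdir"; return 1; }
  # Locate the versioned driver jar inside the extracted tree
  jar="$(find "$workdir" -name 'mysql-connector-java-*-bin.jar' | head -n 1)"
  if [ -z "$jar" ]; then
    echo "no connector jar found in $tarball" >&2
    rm -rf "$workdir"
    return 1
  fi
  # Copy the jar, then replace the generic link (-n: don't follow an
  # existing link, -s: symbolic, -f: overwrite the old link)
  cp "$jar" "$dest/" \
    && ( cd "$dest" && ln -nsf "$(basename "$jar")" mysql-connector-java.jar )
  rc=$?
  rm -rf "$workdir"
  return "$rc"
}
```

Usage, assuming the downloaded file is named `mysql-connector-java-5.1.36.tar.gz`: `install_connector mysql-connector-java-5.1.36.tar.gz /usr/share/java`. Re-pointing the generic link in /usr/share/java, rather than touching the Sqoop lib directory, means the existing link in /usr/hdp/current/sqoop-server/lib picks up the new version without further changes.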

Your MySQL Sqoop import should now work fine.

I would still like to know whether I missed something in the installation or configuration, since the JIRA appears to be resolved and fixed.

TOAD for Hortonworks 2.3

TOAD is a tool that most folks who have done data analysis and database development are familiar with. With the advent of big data technologies, some of that analysis has shifted to the Hadoop space.

So I was really excited when Toad for Hadoop became available for download. For a data analyst, this means not having to worry about where they are connecting to (a relational Oracle DB vs. the Hive metastore on Hadoop), and being able to use the familiar TOAD interface to run SQL (or HQL, for the purists) against Hadoop.

My environment is Hortonworks HDP 2.3 on CentOS 6.7. Officially, TOAD does not support HDP, but since Hortonworks is a pure Hadoop platform with no customizations, it should work nevertheless.

Brad Wulf (@bradwulf) has a detailed post about how to set up Toad against HDP:

http://www.toadworld.com/products/toad-for-hadoop/b/weblog/archive/2015/07/24/toad-for-hadoop-does-39-nt-officially-support-hortonworks-here-39-s-how-to-connect-to-it

But I intend to go one level deeper, checking every configuration setting and port so you know what to look for in case you get an error. That is pretty much what I had to go through to get this up and running.

Step 1 - Verify your environment.

Step 2 - Ensure the cluster is up and running by launching Ambari from the same machine you are running Toad on. This confirms that the cluster is reachable from your machine and that no firewall issues exist. (Hint - also check the ResourceManager UI on port 8088 and the MapReduce JobHistory UI on port 19888.)
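If the browser check is inconclusive, a quick TCP probe from the Toad machine separates "firewall or wrong host" from "service misconfigured". This is a minimal sketch: `port_open` and `check_ports` are hypothetical helper names, it relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command, and the ports are the ones mentioned above (plus 8080, Ambari's default web port).

```shell
#!/usr/bin/env bash
# Sketch: confirm TCP reachability of cluster web UIs from the Toad machine.
# port_open / check_ports are hypothetical helper names.
port_open() {
  local host="$1" port="$2"
  # bash's /dev/tcp pseudo-device opens a TCP connection; give up after 5 s
  timeout 5 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}

check_ports() {
  local host="$1" port rc=0
  shift
  for port in "$@"; do
    if port_open "$host" "$port"; then
      echo "$host:$port reachable"
    else
      echo "$host:$port NOT reachable (firewall, wrong host, or service down?)"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage with hypothetical host names (Ambari, the ResourceManager and the JobHistory server may live on different nodes, so probe each one separately): `check_ports ambari.example.com 8080`, `check_ports rm.example.com 8088`, `check_ports jhs.example.com 19888`.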

Step 3 - Verify the ResourceManager port. This is the only setting that differed when I configured the TOAD ports. The default configuration sometimes picks port 8032 (the Apache Hadoop default for yarn.resourcemanager.address) instead of 8088, which is the default on Hortonworks. You can verify this from Ambari: go to YARN Service > Configurations > Advanced yarn-site > yarn.resourcemanager.webapp.address.
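If you have shell access to a cluster node, you can also read both ResourceManager addresses straight from the client configuration and compare them with what Toad picked up. This sketch assumes the HDP client config is at /etc/hadoop/conf/yarn-site.xml and that GNU sed is available (as on RHEL/CentOS). `yarn_prop` and `show_rm_ports` are hypothetical helpers, and the matching is crude text processing rather than a real XML parser, so Ambari remains the authority.

```shell
#!/usr/bin/env bash
# Sketch: print a property value from a Hadoop *-site.xml file.
# Crude text matching (not a real XML parser); needs GNU sed for "\n".
# yarn_prop / show_rm_ports are hypothetical helper names.
yarn_prop() {
  local key="$1" file="${2:-/etc/hadoop/conf/yarn-site.xml}"
  # Join the file into one line, split it so each <property> block sits on
  # its own line, keep the block for $key, then extract its <value>.
  tr -d '\n' < "$file" \
    | sed -e 's#</property>#&\n#g' \
    | grep -F "<name>$key</name>" \
    | sed -e 's#.*<value>\(.*\)</value>.*#\1#'
}

# Print both ResourceManager addresses: client RPC and web UI
show_rm_ports() {
  local file="${1:-/etc/hadoop/conf/yarn-site.xml}" key
  for key in yarn.resourcemanager.address yarn.resourcemanager.webapp.address; do
    echo "$key = $(yarn_prop "$key" "$file")"
  done
}
```

Running `show_rm_ports` on a cluster node prints one `key = host:port` line per property, which makes it easy to spot whether Toad was given the RPC port or the web UI port.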

Step 4 - Launch the Toad application and go through the configuration steps.

You can set the execution engine to MapReduce or Tez using one of the commands below. The setting applies at the session level and overrides what is set in Ambari. Since the Tez engine has proven to run faster in many cases, I use it by default, and I do see a difference in elapsed time. On the right-hand side you can see the properties of each query, including the engine it was executed on.

set hive.execution.engine=mr;

set hive.execution.engine=tez;
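To put a rough number on the MapReduce vs. Tez difference outside Toad, a sketch could run the same query through the Hive CLI under each engine. It assumes the `hive` client is on the PATH of a cluster node; `--hiveconf` sets the property for that one invocation only, much like the session-level `set` command above. `compare_engines` is a hypothetical helper, and the timing is wall-clock including Hive startup, so treat it as a coarse comparison.

```shell
#!/usr/bin/env bash
# Sketch: run one HQL query under each execution engine and report
# wall-clock seconds. compare_engines is a hypothetical helper name.
compare_engines() {
  local query="$1" engine start end
  for engine in mr tez; do
    start=$(date +%s)
    # --hiveconf applies hive.execution.engine to this invocation only
    hive --hiveconf "hive.execution.engine=$engine" -e "$query" > /dev/null || return 1
    end=$(date +%s)
    echo "$engine: $((end - start))s"
  done
}
```

Usage with a hypothetical table: `compare_engines "SELECT COUNT(*) FROM my_table;"`. Run it a couple of times; the first Tez run includes session startup, which can hide the difference on small queries.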

I love the IntelliSense-style autocompletion that TOAD gives me.

Happy HQL’ing :)

I will play with the data transfer feature next and post my notes.
